Printing secret value in Databricks - Stack Overflow

Nov 11, 2021 · First, install the Databricks Python SDK (pip install databricks-sdk) and configure authentication as described in the SDK documentation. Then you can use the approach below to print out secret …
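
The excerpt is cut off before its code. What follows is a minimal sketch of the idea, not the answer's own code. It assumes a databricks-sdk version whose `WorkspaceClient` exposes `secrets.get_secret(scope=..., key=...)` and returns the value base64-encoded, as the Secrets REST API does. The scope and key names are hypothetical placeholders. Only the decoding helper runs offline; `print_secret` needs a configured workspace.

```python
import base64


def decode_secret_value(encoded: str) -> str:
    """Decode a base64-encoded secret value (as returned by the Secrets API) to text."""
    return base64.b64decode(encoded).decode("utf-8")


def print_secret(scope: str, key: str) -> None:
    """Fetch a secret through the Databricks Python SDK and print it in plain text."""
    # Imported inside the function so this file loads even without databricks-sdk.
    from databricks.sdk import WorkspaceClient

    # WorkspaceClient() picks up credentials from the environment or a config profile.
    w = WorkspaceClient()
    response = w.secrets.get_secret(scope=scope, key=key)
    print(decode_secret_value(response.value))


# Offline check of the decoding step only.
print(decode_secret_value("czNjcjN0"))  # prints s3cr3t
```

Against a real workspace, you would call something like `print_secret("my-scope", "my-key")`, where both names are hypothetical.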

Databricks: managed tables vs. external tables - Stack Overflow

Jun 21, 2024 · While Databricks manages the metadata for external tables, the actual data remains in the specified external location, providing flexibility and control over the data storage …
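
As an illustration that is not taken from the answer, the difference shows up in the DDL. A `LOCATION` clause makes a table external, while leaving it out lets Databricks manage the storage as well. The helper, the table names, and the storage path below are hypothetical. The string building does no quoting or escaping, so treat this as a sketch only.

```python
from typing import Optional


def create_table_ddl(name: str, columns: str, location: Optional[str] = None) -> str:
    """Build a CREATE TABLE statement; adding a LOCATION clause makes it external."""
    ddl = f"CREATE TABLE {name} ({columns})"
    if location is None:
        # Managed table: Databricks controls both the metadata and the data files.
        return ddl
    # External table: only the metadata is managed; the files stay at `location`.
    return f"{ddl} LOCATION '{location}'"


# Hypothetical table names and storage path.
managed = create_table_ddl("main.sales.orders", "id INT, amount DOUBLE")
external = create_table_ddl(
    "main.sales.orders_ext",
    "id INT, amount DOUBLE",
    "abfss://data@myaccount.dfs.core.windows.net/orders",
)
print(managed)
print(external)
```

In a notebook you would pass either string to `spark.sql(...)`. Dropping the managed table also deletes its underlying data. Dropping the external table removes only the metadata and leaves the files at the external location.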

REST API to query Databricks table - Stack Overflow

Jul 24, 2022 · Update (April 2023): There is a new SQL Statement Execution API for querying Databricks SQL tables via the REST API. It's possible to use Databricks for that, although it heavily …
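
Here is a minimal sketch of such a request that uses only the standard library. It assumes the `POST /api/2.0/sql/statements` endpoint with `warehouse_id`, `statement`, and `wait_timeout` fields. The host, token, and warehouse ID are hypothetical placeholders, and the request is only built here, not sent. The row helper assumes the default inline JSON-array result, where rows arrive under `result.data_array`.

```python
import json
import urllib.request
from typing import Any, Dict, List


def build_statement_request(host: str, token: str, warehouse_id: str,
                            statement: str, wait_timeout: str = "30s") -> urllib.request.Request:
    """Build (but do not send) a Statement Execution API request."""
    payload = {
        "warehouse_id": warehouse_id,
        "statement": statement,
        # Wait up to this long for the result before the call returns.
        "wait_timeout": wait_timeout,
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/sql/statements",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def rows_from_response(body: Dict[str, Any]) -> List[List[Any]]:
    """Extract rows from an inline JSON_ARRAY result (empty if there are none yet)."""
    return body.get("result", {}).get("data_array", [])


# Hypothetical workspace host, token and warehouse ID.
req = build_statement_request("https://example.cloud.databricks.com/",
                              "dapi-PLACEHOLDER", "abc123", "SELECT 1 AS x")
# Sending it would look like: json.load(urllib.request.urlopen(req))
print(req.get_method(), req.full_url)
```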

Databricks: How do I get path of current notebook?

Nov 29, 2018 · The issue is that Databricks does not have integration with VSTS. A workaround is to download the notebook locally using the CLI and then use git locally. I would, however, …
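
Below is a sketch of the download step done through the Workspace API. The CLI workaround relies on the same operation, though the exact CLI command is not reproduced here. The sketch assumes `GET /api/2.0/workspace/export` with `path` and `format` query parameters, and a JSON response whose `content` field holds the notebook base64-encoded. The host and notebook path are hypothetical. Sending the request with a bearer token is left out so the example runs offline.

```python
import base64
import json
from urllib.parse import urlencode


def export_url(host: str, notebook_path: str, fmt: str = "SOURCE") -> str:
    """Build the Workspace API export URL for a notebook."""
    query = urlencode({"path": notebook_path, "format": fmt})
    return f"{host.rstrip('/')}/api/2.0/workspace/export?{query}"


def notebook_source(response_body: str) -> str:
    """Decode the base64 `content` field of an export response into source text."""
    return base64.b64decode(json.loads(response_body)["content"]).decode("utf-8")


# Hypothetical workspace host and notebook path.
url = export_url("https://example.cloud.databricks.com", "/Users/me@example.com/etl")
print(url)
```

You would then write the decoded source to a file inside a local git repository and commit it as usual.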

Unity catalog not enabled on cluster in Databricks

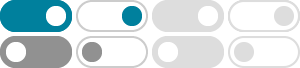

Nov 25, 2022 · I found the problem. I had used access mode None, but it needs to be Single user or Shared. To create a cluster that can access Unity Catalog, the workspace you are creating the …
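
In Clusters API terms, the access mode is the `data_security_mode` field. "Single user" corresponds to `SINGLE_USER` and "Shared" to `USER_ISOLATION`. The sketch below builds a cluster-create body and rejects any other mode up front. The helper name, runtime version, node type, and user are hypothetical placeholders, and newer API versions may accept additional mode names that this check does not cover.

```python
from typing import Optional

# Clusters API `data_security_mode` values that can access Unity Catalog,
# mapped to the access-mode names shown in the UI.
UC_ACCESS_MODES = {"SINGLE_USER": "Single user", "USER_ISOLATION": "Shared"}


def uc_cluster_spec(cluster_name: str, spark_version: str, node_type_id: str,
                    data_security_mode: str, single_user_name: Optional[str] = None,
                    num_workers: int = 1) -> dict:
    """Build a cluster-create body, rejecting access modes without Unity Catalog."""
    if data_security_mode not in UC_ACCESS_MODES:
        raise ValueError(
            f"{data_security_mode!r} cannot access Unity Catalog; "
            f"use one of {sorted(UC_ACCESS_MODES)}"
        )
    spec = {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        "data_security_mode": data_security_mode,
    }
    if data_security_mode == "SINGLE_USER":
        # A single-user cluster must name the user it is assigned to.
        if not single_user_name:
            raise ValueError("SINGLE_USER access mode requires single_user_name")
        spec["single_user_name"] = single_user_name
    return spec


# Hypothetical runtime version and Azure node type.
shared = uc_cluster_spec("uc-shared", "13.3.x-scala2.12", "Standard_DS3_v2", "USER_ISOLATION")
print(shared["data_security_mode"])
```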

databricks - DLT - Views v Materialized Views syntax and how to …

Mar 25, 2024 · I'm creating a DLT pipeline using the medallion architecture. In Silver, I used CDC/SCD1 to take the latest id by date, which is working fine, but I had a question on the …

How to trigger a Databricks job from another Databricks job?

Jul 31, 2023 · Databricks is now rolling out new functionality called "Job as a Task", which allows you to trigger another job as a task in a workflow. Documentation isn't updated yet, but you …
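
Here is a sketch of a Jobs API 2.1 job definition that chains existing jobs with "Job as a Task". It assumes each such task uses a `run_job_task` that references the child job by `job_id`, with `depends_on` for ordering. The job name and IDs are hypothetical. The resulting dict would be the body of a `POST /api/2.1/jobs/create` request.

```python
from typing import Any, Dict, List


def orchestrator_job_settings(name: str, child_job_ids: List[int]) -> Dict[str, Any]:
    """Build Jobs API 2.1 settings that run existing jobs one after another."""
    tasks: List[Dict[str, Any]] = []
    for i, job_id in enumerate(child_job_ids):
        task: Dict[str, Any] = {
            "task_key": f"step_{i}",
            # "Job as a Task": this task triggers another job by its ID.
            "run_job_task": {"job_id": job_id},
        }
        if tasks:
            # Start only after the previous child job has finished successfully.
            task["depends_on"] = [{"task_key": tasks[-1]["task_key"]}]
        tasks.append(task)
    return {"name": name, "tasks": tasks}


# Hypothetical IDs of two existing jobs.
settings = orchestrator_job_settings("nightly-orchestrator", [111, 222])
print(settings["tasks"][1])
```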

Databricks - Download a dbfs:/FileStore file to my Local Machine

In a Spark cluster you access DBFS objects using Databricks file system utilities, Spark APIs, or local file APIs. On a local computer you access DBFS objects using the Databricks CLI or …
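
Two path conventions tie these access methods together. On clusters where the DBFS FUSE mount is available, local file APIs see `dbfs:/...` under `/dbfs/...`. Files under `/FileStore` can also be downloaded in a browser from `https://<databricks-instance>/files/<path>`, though some deployments additionally expect an `?o=<workspace-id>` query parameter. The helpers below are a hypothetical sketch of both mappings, and the host and file path are placeholders.

```python
def dbfs_to_local_path(dbfs_uri: str) -> str:
    """Map dbfs:/... to the /dbfs/... path used by local file APIs on a cluster."""
    prefix = "dbfs:/"
    if not dbfs_uri.startswith(prefix):
        raise ValueError(f"not a dbfs URI: {dbfs_uri!r}")
    return "/dbfs/" + dbfs_uri[len(prefix):].lstrip("/")


def filestore_download_url(host: str, dbfs_uri: str) -> str:
    """Map dbfs:/FileStore/... to the browser download URL under /files/."""
    prefix = "dbfs:/FileStore/"
    if not dbfs_uri.startswith(prefix):
        raise ValueError(f"not under dbfs:/FileStore/: {dbfs_uri!r}")
    return f"{host.rstrip('/')}/files/{dbfs_uri[len(prefix):]}"


# Hypothetical file and workspace host.
uri = "dbfs:/FileStore/tables/data.csv"
print(dbfs_to_local_path(uri))
print(filestore_download_url("https://example.cloud.databricks.com", uri))
```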

Installing multiple libraries 'permanently' on Databricks' cluster ...

Feb 28, 2024 · The easiest way is to use the Databricks CLI's libraries command for an existing cluster (or the create job command, specifying the appropriate parameters for your job cluster). You can also use the REST …
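
For the REST route, a single `POST /api/2.0/libraries/install` call can attach several libraries to an existing cluster. Libraries installed on a cluster this way are reinstalled whenever it restarts. The sketch below only builds the request body. The helper name, cluster ID, and package coordinates are hypothetical placeholders.

```python
from typing import Any, Dict, Iterable


def libraries_install_body(cluster_id: str, pypi_packages: Iterable[str] = (),
                           maven_coordinates: Iterable[str] = ()) -> Dict[str, Any]:
    """Build a Libraries API install body covering several libraries at once."""
    libraries = [{"pypi": {"package": p}} for p in pypi_packages]
    libraries += [{"maven": {"coordinates": c}} for c in maven_coordinates]
    return {"cluster_id": cluster_id, "libraries": libraries}


# Hypothetical cluster ID and library list.
body = libraries_install_body(
    "0123-456789-abcdefgh",
    pypi_packages=["pandas==2.1.4", "pyyaml"],
    maven_coordinates=["com.example:my-lib_2.12:1.0.0"],
)
print(len(body["libraries"]))
```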

Databricks Permissions Required to Create a Cluster

Nov 9, 2023 · Having the "Can Manage" permission means you have the highest level of control over clusters in Azure Databricks. As per Official …
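
On an existing cluster, the object permission levels are `CAN_ATTACH_TO`, `CAN_RESTART`, and `CAN_MANAGE`, and each level includes the ones below it. Whether a user may create clusters at all is governed separately, through workspace entitlements or cluster policies. The sketch below models that ordering. It also builds a body for `PATCH /api/2.0/permissions/clusters/{cluster_id}`. The helper names and user are hypothetical.

```python
from typing import Any, Dict

# Cluster permission levels, lowest to highest; each level includes the ones before it.
CLUSTER_LEVELS = ["CAN_ATTACH_TO", "CAN_RESTART", "CAN_MANAGE"]


def grants_at_least(granted: str, required: str) -> bool:
    """True if `granted` is the same as or higher than `required`."""
    return CLUSTER_LEVELS.index(granted) >= CLUSTER_LEVELS.index(required)


def cluster_acl_body(user_name: str, level: str) -> Dict[str, Any]:
    """Build a Permissions API body granting one user a level on a cluster."""
    if level not in CLUSTER_LEVELS:
        raise ValueError(f"unknown cluster permission level: {level!r}")
    return {"access_control_list": [{"user_name": user_name, "permission_level": level}]}


print(grants_at_least("CAN_MANAGE", "CAN_RESTART"))     # True
print(grants_at_least("CAN_ATTACH_TO", "CAN_RESTART"))  # False
```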